Is DLAA actually worth using, though? I tried two of its most recent implementations, in No Man’s Sky and Deep Rock Galactic, and found that any image quality benefits come at a sometimes hefty performance cost – even on one of the best graphics cards. Then again, because DLAA requires a muscular GPU in the first place, the frames-per-second difference might not even be noticeable unless your monitor has an esports-grade refresh rate. Here’s everything you need to know about Nvidia DLAA, including how exactly Nvidia’s AI does the heavy lifting, and which games can use it. You’ll find my quality comparisons and performance benchmarks a wee scroll further down.

What is DLAA and how does it work?

Explaining DLAA is much like explaining DLSS, because they both rely on the same AI system. The work actually starts over at Nvidia HQ, where a supercomputer feeds extremely high-res images into a neural network – essentially, an AI model. These images teach the AI how to accurately predict the content of upcoming frames, which in turn generates anti-aliasing algorithms that allow for rendered images with more detail and more intelligently-placed pixels. The AI model, including its latest learnings, is then sent to your RTX graphics card via driver update.

With both DLAA and DLSS, the GPU can apply these AI-powered algorithms when running a supported game, theoretically producing a sharper image than other, dumber AA systems. The big difference is that DLSS involves upscaling, so games are first rendered at a lower resolution than your monitor’s, then pieced back together to resemble native res while also including that AI anti-aliasing. With DLAA, games are simply rendered at native res to begin with. It’s much more of a ‘simple’ anti-aliasing option in this regard, even if getting there does require a supercomputer and some machine learning.

On paper, this should allow DLAA to look slightly sharper than DLSS, as all other things being equal, it will always use a higher rendering resolution as a starting point. However, it also means DLAA gives up the performance advantage of DLSS; the latter’s upscaling process isn’t very GPU-heavy in itself, which together with the less demanding render resolution means games will simply run faster with it. It’s also worth mentioning that Nvidia’s upscaling is very, very good: on DLSS’ Quality and Balanced modes, it usually looks as good as native, if not even a little better.

It’s all very sciencey, which I know isn’t massively interesting to every PC owner. But the long and short of it is that DLAA is intended to boost the visual quality of games that can already run smoothly without upscaling, giving RTX graphics card owners the choice of digging into that extra FPS headroom in exchange for prettier anti-aliasing.

Which games support DLAA?

Not many, in truth. DLSS support might be approaching the 200 games mark but as of June 2022, the newer and more specialised DLAA only works in a relative handful:

- Chorus
- Deep Rock Galactic
- Farming Simulator
- Jurassic World Evolution 2
- No Man’s Sky
- The Elder Scrolls Online

Unofficially, Deathloop can use DLAA as well. In the game’s eyes, it would be running DLSS while rendering at native resolution, but since they use the same algorithms, that’s all DLAA really is. Setting it up is also more involved than clicking a single toggle: you’d need to enable DLSS, set it to use Adaptive Quality, set the target frame rate to 30fps and finally flip on the Quality setting. Weird, innit.

You could also, potentially, attain a DLAA-like effect in a wider range of games by combining DLSS with DLDSR (Deep Learning Dynamic Super Resolution). Another piece of Nvidia imaging tech for RTX cards, DLDSR is a downsampler that can improve image quality by rendering games above native resolution, and because it works at the driver level it doesn’t require per-game implementation. By mixing DLSS upscaling with DLDSR downsampling, you could get the former’s AI anti-aliasing with an internal render resolution that balances out to match your monitor’s native res. DLAA, basically. It’s a total bodge job, and requires at least some manual arithmetic to end up at the correct resolution, but does work if you select the right combination of settings.

If all that doesn’t appeal, you’ll have to stick with the games listed above. Also, just for the curious: no, you can’t have DLSS and DLAA enabled at the same time.
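For anyone tempted by the DLSS-plus-DLDSR bodge, the manual arithmetic can be roughed out in a few lines of Python. This is only a sketch: the per-axis scale factors below are the commonly cited ones for each mode, not figures from Nvidia's documentation or from my testing, and the function names are made up for illustration. The idea is simply that DLDSR multiplies the output resolution, DLSS then renders at a fraction of it, and you want the two to cancel out.

```python
from fractions import Fraction

# ASSUMPTION: commonly cited per-axis render scales for DLSS modes.
DLSS_AXIS_SCALE = {
    "Quality": Fraction(2, 3),
    "Balanced": Fraction(58, 100),
    "Performance": Fraction(1, 2),
}

# ASSUMPTION: DLDSR factors are advertised as total pixel multipliers,
# so the per-axis scale is the square root (1.78x -> 4/3, 2.25x -> 3/2).
DLDSR_AXIS_SCALE = {
    "1.78x": Fraction(4, 3),
    "2.25x": Fraction(3, 2),
}


def internal_resolution(native, dldsr, dlss):
    """Estimate the render resolution when DLSS runs on top of DLDSR."""
    scale = DLDSR_AXIS_SCALE[dldsr] * DLSS_AXIS_SCALE[dlss]
    width, height = native
    return round(width * scale), round(height * scale)


def matches_native(native, dldsr, dlss):
    """True if the combination renders at the monitor's own resolution."""
    return internal_resolution(native, dldsr, dlss) == tuple(native)


if __name__ == "__main__":
    native = (2560, 1440)
    for dldsr in DLDSR_AXIS_SCALE:
        for dlss in DLSS_AXIS_SCALE:
            res = internal_resolution(native, dldsr, dlss)
            tag = "native, DLAA-like" if res == native else "not native"
            print(f"DLDSR {dldsr} + DLSS {dlss}: {res[0]}x{res[1]} ({tag})")
```

Under those assumed factors, DLDSR 2.25x with DLSS Quality is the pairing that lands exactly on native res, since 3/2 multiplied by 2/3 is 1. Actual resolutions offered by the driver and the game may be rounded differently, so treat the output as a guide rather than gospel.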

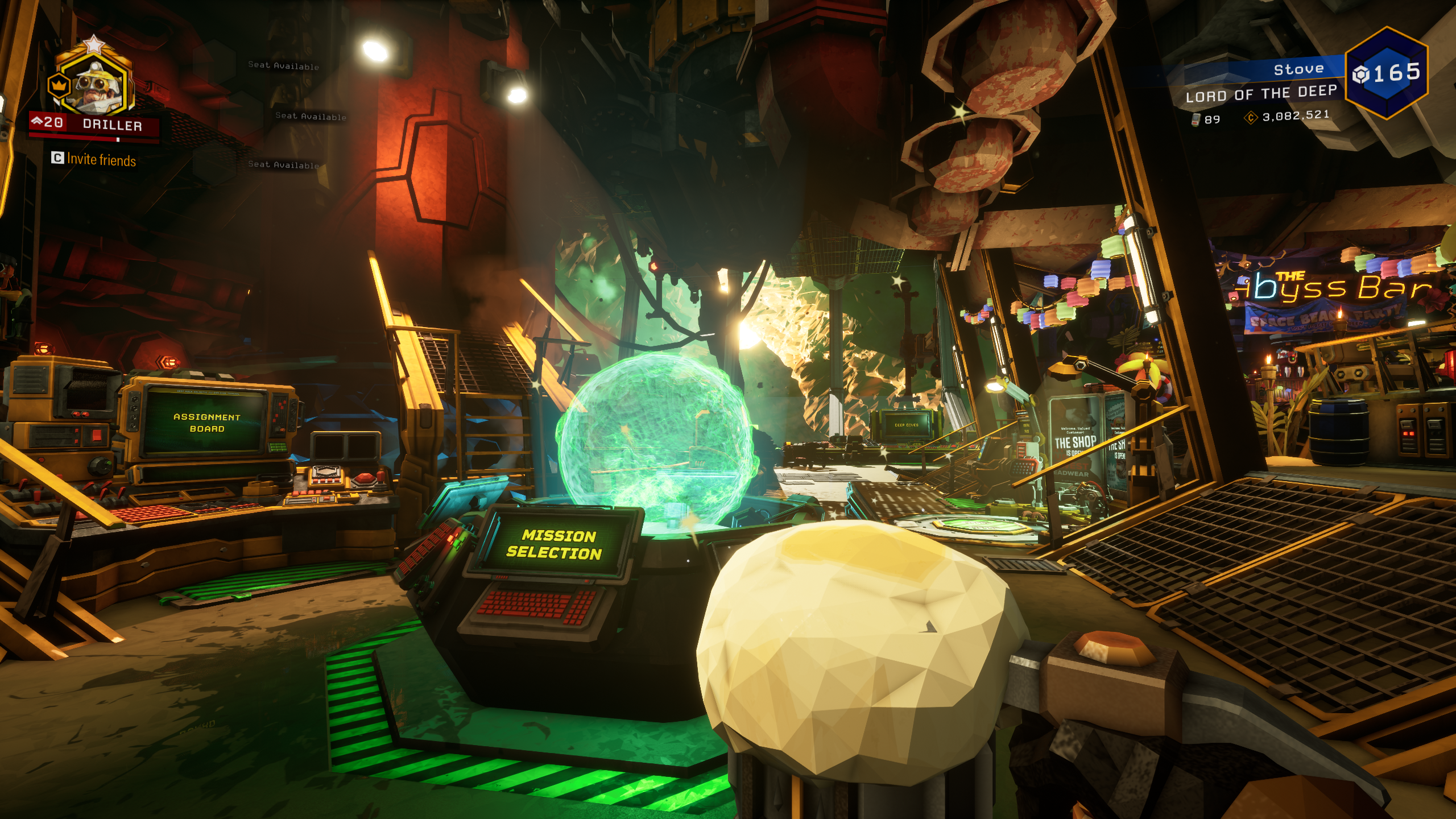

What does DLAA look like?

In pure image quality terms, DLAA does make good on its promise – just. My comparison screenshots show how DLAA stacks up against the highest-quality DLSS setting, as well as native/TAA, across No Man’s Sky and Deep Rock Galactic.

It’s not a completely perfect form of anti-aliasing, as the occasional jaggies are present, if not particularly pronounced: on that circular ship engine in No Man’s Sky, for instance, which even basic TAA seems to do a better job of smoothing out. But then, TAA’s whole deal is blurring out details to hide jaggies, which has the clear downside of… blurred details. DLAA therefore looks a lot sharper overall, and especially in motion. Even if you can’t see it in these screenshots, tricky environmental elements like mesh grates and distant staircases don’t shimmer as much with DLAA as they do with TAA.

Or, for that matter, DLSS. Both Nvidia technologies naturally look very similar as they’re using the same machine-learned AA, though in the smaller details, DLAA can look a touch more polished. A zoom in on our spaceperson’s space backpack, for instance, highlights a sharper, more in-focus look to the textures and edges.

And it’s not just close-up details where you can see the difference. Over in DRG, a distant monitor displays noticeably clearer text using DLAA. Again, TAA proves better at reducing the jagged effect, but only at the cost of making the whole scene look a little less in-focus. To me, at least, it’s worth giving that up in exchange for DLAA’s overall better detail reproduction. On this grated ramp, TAA even seems to chip away bits of the metal, whereas it’s all rendered and presented properly with DLAA.

I don’t want to overstate the jagginess of DLAA, either. In motion, it looks fine, and that shouldn’t be a surprise to anyone who’s happily run DLSS, as the anti-aliasing is basically the same. It’s just the higher starting resolution that DLAA can leverage for a modest, but real, quality improvement.

How does DLAA perform?

Since DLAA lacks upscaling, it was never going to improve performance like DLSS could. But maybe with all its AI smarts, it could impose less of a performance tax than old-timey anti-aliasing? No such luck. In both games tested, and regardless of native resolution, DLAA was consistently the harshest on frames-per-second. And this was with an Nvidia GeForce RTX 3070, one of the mightier models among the compatible RTX range.

Granted, it was a sub-10fps difference from TAA at 4K, and even with both games’ Ultra graphics presets engaged, the RTX 3070 never wanted for high frame rates at 1440p and 1080p. If anything, these results show the exact conditions that Nvidia had in mind for DLAA: when performance is already so high that it doesn’t matter much if you knock a few frames off for its image quality enhancements. Indeed, Deep Rock Galactic losing 36fps at 1440p (compared to TAA) sounds terrible, but you wouldn’t even see the difference on a 144Hz monitor. And you’d have to really, really squint to see it on a 240Hz screen.

That said, results will vary according to your own hardware setup, and if you’re getting by with an older, lower-spec RTX GPU – the RTX 2060, for instance – then I wouldn’t blame you for peering more keenly at that performance gap between DLAA and DLSS. The 4K results, in particular, are a convincing argument for having your AI anti-aliasing served with a side of upscaling. The dab of extra detail with DLAA is nice but I’m not sure it’s worth more than a 50% speed boost, y’know?

As such, DLSS is definitely still the king of Nvidia graphics wizardry: the kind you’d buy a GeForce graphics card for specifically (apologies to FSR 2.0). DLAA is neat, and can most certainly be worth switching on if you’ve got the performance headroom – it’s just not as much of a must-have feature, especially when so few games have built-in support for it.

Speaking of which, I’m wondering if game developers (and Nvidia) are missing a trick here. Other than The Elder Scrolls Online, all of the currently supported games are quite recent releases. Understandable, given DLAA is a new-ish technology, but would it not make even more sense to add it to older games? These would be easier to run than contemporary releases, thus giving GPUs more performance headroom to spare, and the extra detail reproduction could be like a fresh coat of paint on aging visuals. Just a thought.